Dask dtypes

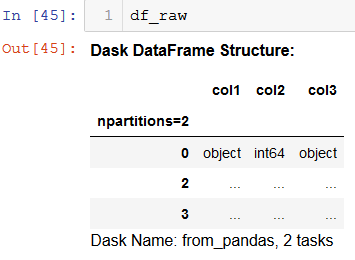

Hello team, I am trying to use Parquet to store a DataFrame with a vector column. It looks like Dask incorrectly assumes a list of floats to be a string and converts it automatically. The dtype of df looks correct, but this is misleading.

In many cases we read tabular data from some source, modify it, and write it out to another data destination. In this transfer we have an opportunity to tighten the data representation a bit, for example by changing dtypes or using categoricals. Often people do this by hand.
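As an illustration of doing this by hand, the sketch below tightens a small pandas DataFrame with `astype`. The column names and values are made up for the example, and the choice of `int8` assumes the values fit in that range.

```python
import pandas as pd

# Hypothetical data: a repetitive text column and a small-integer column.
df = pd.DataFrame({
    "city": ["Oslo", "Lima", "Oslo", "Lima"] * 250,
    "visits": [1, 2, 3, 4] * 250,
})
before = df.memory_usage(deep=True).sum()

# Tighten the representation: categorical for repeated labels,
# a narrower integer type for small counts.
tight = df.astype({"city": "category", "visits": "int8"})
after = tight.memory_usage(deep=True).sum()

print(before, after)
```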


Dask makes it easy to read a small file into a Dask DataFrame. Suppose you have a dogs. For a single small file, Dask may be overkill and you can probably just use pandas. Dask starts to gain a competitive advantage when dealing with large CSV files. A rule of thumb for working with pandas is to have available RAM of at least 5x the size of your dataset. Consider Dask whenever you exceed this limit. Dask DataFrames are composed of multiple partitions, each of which is a pandas DataFrame. Dask intentionally splits the data into multiple pandas DataFrames so that operations can be performed on multiple slices of the data in parallel. See the coiled-datasets repo for more information about accessing sample datasets. The number of partitions depends on the value of the blocksize argument.

The next concept for you to understand is the Delayed object.

Note: This tutorial is a fork of the official Dask tutorial, which you can find here. In this tutorial, we will use dask. This cluster is running only on your own computer, but it operates exactly the way it would on a cloud cluster where the workers live on other computers. When you type client in a Jupyter notebook, you should see the cluster's status pop up. This status tells me that I have four processes running on my computer, each running 4 threads, for 16 cores total.

If you have worked with Dask DataFrames or Dask Arrays, you have probably come across the meta keyword argument, perhaps while using methods like apply. We will look at meta mainly in the context of Dask DataFrames; however, similar principles also apply to Dask Arrays. Dask keeps track of the structure of a DataFrame, such as its column names and dtypes, without computing it. This metadata information is called meta. Dask uses meta for understanding Dask operations and creating accurate task graphs. The meta keyword argument in various Dask DataFrame functions allows you to explicitly share this metadata information with Dask. Note that the keyword argument is concerned with the metadata of the output of those functions.



When inferring dtypes, Dask only looks at a sample of the rows. In this case, the datatypes inferred from the sample are incorrect. This would rely pretty heavily on NumPy coercion rules. Certain file formats, like Parquet, let you store file metadata in the footer.

As you might expect, all calls on a Delayed object are evaluated lazily. When we pass this data into another delayed function call, we are constructing an edge to this new node. Delayed objects are incredibly useful for creating algorithms that can't be represented with Dask's standard user interfaces. This means that repeated computations will have to load all of the data each time. Run the code above again: is it faster or slower than you would expect?
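The lazy evaluation of Delayed objects can be sketched with two toy functions. `inc` and `add` are hypothetical names used only for illustration.

```python
from dask import delayed


@delayed
def inc(x):
    # Nothing runs when inc(...) is called; a graph node is created.
    return x + 1


@delayed
def add(a, b):
    return a + b


# Passing Delayed objects into another delayed call adds edges
# from the inc nodes to the add node.
total = add(inc(1), inc(2))
print(type(total).__name__)

# The work only happens on compute().
result = total.compute()
print(result)  # 5
```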


Properly setting dtypes when reading files is sometimes needed for your code to run without error. This should be easier to test and get right in the simple case. You would have to use astype to actually get the correct dtype. It's a useful convention to use this instead of df (common when dealing with pandas DataFrames) so you can easily distinguish them. The function call will return some data or an object. Dask can decide later on the best place to run the actual computation.
