Flink keyBy

Operators transform one or more DataStreams into a new DataStream.

In this section, you will learn about the APIs that Flink provides for writing stateful programs. See Stateful Stream Processing for the concepts behind stateful stream processing. To use keyed state, you first need to specify a key on a DataStream. This key is used to partition both the state and the records in the stream. Doing so yields a KeyedStream, which allows operations that use keyed state. A key selector function takes a single record as input and returns the key for that record.
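
To make the key selector and KeyedStream idea concrete, here is a minimal, Flink-free sketch in plain Java of how records could be routed once a key is chosen. It assumes the scheme Flink uses conceptually (each key maps to one of maxParallelism key groups, and contiguous ranges of key groups map to parallel operator instances), but it substitutes Object.hashCode() for the hash Flink actually applies. The names KeyByModel, keyGroupFor, and instanceFor are hypothetical. In a real job, the equivalent step is calling keyBy with a KeySelector on a DataStream.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Simplified model of keyBy routing: key selector -> key group -> parallel instance.
// Assumption: Object.hashCode() stands in for the hash Flink applies internally.
class KeyByModel {

    // Maps a key to one of maxParallelism key groups (simplified hash).
    static int keyGroupFor(Object key, int maxParallelism) {
        return Math.floorMod(key.hashCode(), maxParallelism);
    }

    // Maps a key group onto one of `parallelism` instances using contiguous ranges.
    static int instanceFor(int keyGroup, int maxParallelism, int parallelism) {
        return keyGroup * parallelism / maxParallelism;
    }

    // Partitions records into per-instance buckets using a key selector function.
    static <T, K> List<List<T>> keyBy(List<T> records, Function<T, K> keySelector,
                                      int maxParallelism, int parallelism) {
        List<List<T>> instances = new ArrayList<>();
        for (int i = 0; i < parallelism; i++) {
            instances.add(new ArrayList<>());
        }
        for (T record : records) {
            K key = keySelector.apply(record); // record in, key out
            int group = keyGroupFor(key, maxParallelism);
            instances.get(instanceFor(group, maxParallelism, parallelism)).add(record);
        }
        return instances;
    }

    public static void main(String[] args) {
        List<String> words = List.of("apple", "avocado", "banana", "blueberry", "cherry");
        // Key each word by its first letter, similar in spirit to a Flink KeySelector.
        List<List<String>> buckets = keyBy(words, w -> w.charAt(0), 128, 4);
        for (int i = 0; i < buckets.size(); i++) {
            System.out.println("instance " + i + ": " + buckets.get(i));
        }
    }
}
```

Because the route depends only on the key, every record with the same key lands on the same instance, which is what lets that instance keep the key's state locally.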

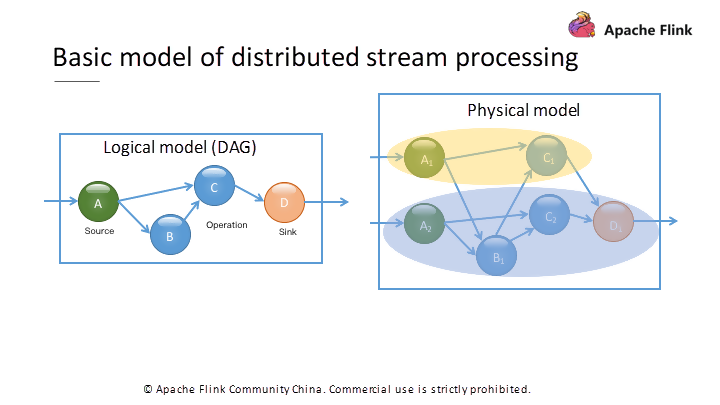

This article explains the basic concepts, installation, and deployment process of Flink. The definition of stream processing may vary. Conceptually, stream processing and batch processing are two sides of the same coin. Which one you are doing depends on whether the elements of an ArrayList in Java are treated directly as a bounded dataset and accessed by index, or are accessed one at a time through an iterator. In Figure 1, the left side shows a coin classifier, which can be described as a stream processing system. All components used for coin classification are connected in series in advance. Coins continuously enter the system and are output to different queues for later use. The same is true for the picture on the right.
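
The ArrayList analogy can be illustrated with a small plain-Java sketch: the same bounded list is summed once by index, as a batch job with the whole dataset at hand would do, and once element by element through an iterator, emitting an updated result after every element as a streaming job would. The class name BatchVsStream is hypothetical, and nothing here uses Flink.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Analogy only: index-based access over a bounded list (batch view)
// versus one-at-a-time consumption through an iterator (stream view).
class BatchVsStream {

    // Batch view: the whole dataset is available, so any element can be read by index.
    static int sumByIndex(List<Integer> data) {
        int sum = 0;
        for (int i = 0; i < data.size(); i++) {
            sum += data.get(i);
        }
        return sum;
    }

    // Stream view: elements arrive one by one; keep a running result and
    // emit an update after each element, never revisiting earlier ones.
    static List<Integer> runningSums(Iterator<Integer> source) {
        List<Integer> emitted = new ArrayList<>();
        int sum = 0;
        while (source.hasNext()) {
            sum += source.next();
            emitted.add(sum);
        }
        return emitted;
    }

    public static void main(String[] args) {
        List<Integer> coins = List.of(1, 5, 10, 25);
        System.out.println(sumByIndex(coins));             // 41
        System.out.println(runningSums(coins.iterator())); // [1, 6, 16, 41]
    }
}
```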

Data transmission between multiple instances in the same process usually does not need to go through the network.

Programs can combine multiple transformations into sophisticated dataflow topologies. Map takes one element and produces one element; for example, a map function can double the values of the input stream. FlatMap takes one element and produces zero, one, or more elements; for example, a flatmap function can split sentences into words. Filter evaluates a boolean function for each element and retains those for which the function returns true.
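
The per-element semantics of these three transformations can be sketched with java.util.stream on a bounded list. This is only an analogy: in Flink, the same logic would go into a MapFunction, a FlatMapFunction (which emits results through a Collector), and a FilterFunction passed to DataStream#map, #flatMap, and #filter. The class name and the zero-dropping filter are illustrative choices, not taken from the text.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// Stand-in for map, flatMap and filter using java.util.stream on a bounded list;
// it shows the per-element semantics only, not Flink's runtime.
class BasicTransformations {

    // Map: one element in, one element out (doubles each value).
    static List<Integer> doubleValues(List<Integer> input) {
        return input.stream().map(v -> 2 * v).collect(Collectors.toList());
    }

    // FlatMap: one element in, zero or more out (splits sentences into words).
    // An empty sentence yields zero words because empty tokens are skipped.
    static List<String> splitSentences(List<String> sentences) {
        return sentences.stream()
                .flatMap(s -> Arrays.stream(s.split(" ")).filter(w -> !w.isEmpty()))
                .collect(Collectors.toList());
    }

    // Filter: keep elements for which the predicate returns true (drops zeros).
    static List<Integer> nonZero(List<Integer> input) {
        return input.stream().filter(v -> v != 0).collect(Collectors.toList());
    }
}
```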

In the first article of the series, we gave a high-level description of the objectives and required functionality of a Fraud Detection engine. We also described how to make data partitioning in Apache Flink customizable based on modifiable rules instead of using a hardcoded KeysExtractor implementation. We intentionally omitted details of how the applied rules are initialized and what possibilities exist for updating them at runtime. In this post, we will address exactly these details. You will learn how the approach to data partitioning described in Part 1 can be applied in combination with a dynamic configuration. When used together, these two patterns can eliminate the need to recompile the code and redeploy your Flink job for a wide range of business logic modifications. DynamicKeyFunction provides dynamic data partitioning, while DynamicAlertFunction executes the main logic of processing transactions and sends alert messages according to the defined rules. A major drawback of hardcoding the list of rules in the job is that every rule modification requires recompiling the job. In a real Fraud Detection system, rules are expected to change frequently, which makes this approach unacceptable from a business and operational point of view.
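
The idea behind rule-driven partitioning can be sketched in plain Java, assuming each rule simply lists the event fields to group by. Rule, KeyedEvent, keyForRules, and the field names payerId and beneficiaryId are hypothetical and are not taken from the DynamicKeyFunction implementation; how the rule list is delivered and updated at runtime is outside this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical sketch of rule-driven key extraction: each rule names the fields
// to group by, and every event is emitted once per rule with a key built from them.
class DynamicKeySketch {

    static final class Rule {
        final int ruleId;
        final List<String> groupingKeyNames;

        Rule(int ruleId, List<String> groupingKeyNames) {
            this.ruleId = ruleId;
            this.groupingKeyNames = groupingKeyNames;
        }
    }

    static final class KeyedEvent {
        final Map<String, String> event;
        final String key;
        final int ruleId;

        KeyedEvent(Map<String, String> event, String key, int ruleId) {
            this.event = event;
            this.key = key;
            this.ruleId = ruleId;
        }
    }

    // Builds a composite key such as "payerId=1;beneficiaryId=7" from the rule's fields.
    static String extractKey(Map<String, String> event, List<String> keyNames) {
        StringJoiner joiner = new StringJoiner(";");
        for (String name : keyNames) {
            joiner.add(name + "=" + event.get(name));
        }
        return joiner.toString();
    }

    // Fans one event out to one keyed copy per rule.
    static List<KeyedEvent> keyForRules(Map<String, String> event, List<Rule> rules) {
        List<KeyedEvent> out = new ArrayList<>();
        for (Rule rule : rules) {
            out.add(new KeyedEvent(event, extractKey(event, rule.groupingKeyNames), rule.ruleId));
        }
        return out;
    }
}
```

Because the grouping fields come from rule data rather than from code, changing a rule changes how events are keyed without recompiling; a subsequent keyBy on the key field would then co-locate the events that each rule evaluates together.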

Notes: If you set the state visibility to StateTtlConfig. You can also use isEmpty to check whether this map contains any key-value mappings. The Flink compaction filter checks the expiration timestamp of state entries with TTL and excludes expired values. To use operator state, a stateful function can implement the CheckpointedFunction interface. We will first look at the different types of state available and then see how they can be used in a program. When the topology of the pipeline is complex, users can add a topological index to vertex names by setting pipeline. However, this may cause some migration problems. Configure the sink to write the data out.

As shown in Figure 1, the physical grouping methods in Flink DataStream include Global, in which an upstream operator sends all records to the first instance of the downstream operator. The third type of operation combines multiple streams and transforms them into a single stream.

You can activate debug logs from the native code of the RocksDB filter by enabling the debug level for FlinkCompactionFilter. The state backends store the timestamp of the last modification along with the user value, which means that enabling this feature increases state storage consumption.
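
The TTL notes can be illustrated with a simplified model: the value is stored together with the timestamp of its last modification (the extra field is the storage overhead mentioned above), reads exclude expired entries, and a separate cleanup step physically drops them, roughly the role the compaction filter plays in RocksDB. TtlValueModel is a hypothetical class, not Flink's implementation, and time is passed in explicitly so the behavior is easy to test. In Flink itself, TTL is configured with StateTtlConfig and enabled on a state descriptor.

```java
// Simplified TTL model (not Flink's implementation): value plus last-modification
// timestamp; reads treat the entry as absent once lastModified + ttl <= now.
class TtlValueModel<V> {
    private V value;
    private long lastModified; // the extra per-entry timestamp TTL requires
    private final long ttlMillis;

    TtlValueModel(long ttlMillis) {
        this.ttlMillis = ttlMillis;
    }

    // Write: store the value and refresh the modification timestamp.
    void update(V newValue, long nowMillis) {
        this.value = newValue;
        this.lastModified = nowMillis;
    }

    private boolean expired(long nowMillis) {
        return nowMillis - lastModified >= ttlMillis;
    }

    // Read: never return an expired value, even if it has not been cleaned up yet.
    V get(long nowMillis) {
        if (value == null || expired(nowMillis)) {
            return null;
        }
        return value;
    }

    // Cleanup analogue: physically drop the entry once it has expired.
    boolean cleanUpIfExpired(long nowMillis) {
        if (value != null && expired(nowMillis)) {
            value = null;
            return true;
        }
        return false;
    }
}
```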

Therefore, you do not need to physically pack the data set types into keys and values. Let's look at a more complicated example. Join two elements e1 and e2 of two keyed streams with a common key over a given time interval, so that e1. The stream processing system has many features. The counterpart of snapshotState, initializeState, is called every time the user-defined function is initialized, whether the function is being initialized for the first time or is recovering from an earlier checkpoint. The name needs to be as concise as possible to avoid putting high pressure on external systems. The cached intermediate result is generated lazily the first time it is computed, so that later jobs can reuse it. RocksDB periodically runs asynchronous compactions to merge state updates and reduce storage.

By Cui Xingcan, an external committer, and collated by Gao Yun.

The states of operators on a KeyedStream are stored in a distributed manner. At the stream processing level, create a data source to access the data.
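
The interval join mentioned above can be sketched on bounded in-memory lists, assuming the usual interval-join condition: e1 and e2 match when they share a key and e2's timestamp lies within [e1.timestamp + lowerBound, e1.timestamp + upperBound], both bounds inclusive. IntervalJoinSketch, Event, withinInterval, and join are hypothetical names, and the nested loop is there for clarity only.

```java
import java.util.ArrayList;
import java.util.List;

// In-memory sketch of an interval join: same key, and e2.timestamp within
// [e1.timestamp + lowerBound, e1.timestamp + upperBound], inclusive.
class IntervalJoinSketch {

    static final class Event {
        final String key;
        final long timestamp;

        Event(String key, long timestamp) {
            this.key = key;
            this.timestamp = timestamp;
        }
    }

    static boolean withinInterval(long t1, long t2, long lowerBound, long upperBound) {
        return t1 + lowerBound <= t2 && t2 <= t1 + upperBound;
    }

    // Nested-loop join over bounded lists; emits "key:t1-t2" for each matching pair.
    static List<String> join(List<Event> left, List<Event> right, long lowerBound, long upperBound) {
        List<String> pairs = new ArrayList<>();
        for (Event e1 : left) {
            for (Event e2 : right) {
                if (e1.key.equals(e2.key)
                        && withinInterval(e1.timestamp, e2.timestamp, lowerBound, upperBound)) {
                    pairs.add(e1.key + ":" + e1.timestamp + "-" + e2.timestamp);
                }
            }
        }
        return pairs;
    }
}
```

A streaming runtime cannot see both inputs in full, so it would typically buffer each side in keyed state and discard elements once no future element can match them.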
