io.confluent.kafka.serializers.KafkaAvroSerializer

Support for the Avro, Protobuf, and JSON Schema serialization formats is not limited to Schema Registry but is provided throughout Confluent Platform.

There are two ways to interact with Kafka: using a native client for your language combined with serializers compatible with Schema Registry, or using the REST Proxy. Most commonly, you will use the serializers if your application is developed in a language with supported serializers, and the REST Proxy for applications written in other languages. Whichever method you choose, the most important factor is to ensure that your application coordinates with Schema Registry to manage schemas and guarantee data compatibility. Java applications can use the standard Kafka producers and consumers, but will substitute the default ByteArraySerializer with io.
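As a sketch of what the serializer substitution looks like in practice, the class below builds producer settings that keep a String key serializer and use the Confluent Avro serializer for values. It only uses java.util.Properties, so it compiles without Kafka on the classpath; actually passing these settings to a KafkaProducer would require the kafka-clients and Confluent kafka-avro-serializer artifacts. The broker address, the Schema Registry URL, and the choice of String keys are assumptions for illustration.

```java
import java.util.Properties;

public class AvroProducerConfig {
    // Builds producer settings that replace the default value serializer with the
    // Schema Registry-aware Avro serializer. Values here are illustrative assumptions.
    public static Properties producerProps(String bootstrapServers, String schemaRegistryUrl) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        // Assumption: keys are plain strings, values are Avro records.
        props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
        props.put("value.serializer", "io.confluent.kafka.serializers.KafkaAvroSerializer");
        // Where the serializer registers and looks up schemas.
        props.put("schema.registry.url", schemaRegistryUrl);
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical local endpoints.
        Properties props = producerProps("localhost:9092", "http://localhost:8081");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

Keeping the configuration in one factory method makes it easy to reuse the same settings for every producer in the application.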

Typically, IndexedRecord is used for the value of the Kafka message. If a key is used, it is often one of the primitive types. When a message is sent to a topic t, the Avro schemas for the key and the value are automatically registered in Schema Registry under the subjects t-key and t-value, respectively, provided the compatibility test passes. The only exception is the null type, which is never registered in Schema Registry. When sending a message with a key of type string and a value of type Avro record, a SerializationException may occur during the send call if the data is not well formed. Likewise, when receiving such messages, a SerializationException may occur while getting the message key or value if the data is not well formed. A subject name strategy setting determines how to construct the subject name under which the key schema is registered with Schema Registry.
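To make the subject naming concrete, here is a plain-Java sketch of the naming rules behind the three built-in strategies shipped with the Confluent serializers (TopicNameStrategy, the default, plus RecordNameStrategy and TopicRecordNameStrategy). This is an illustration of the resulting subject strings, not the library's own classes; the record name com.example.Payment is hypothetical.

```java
public class SubjectNames {
    // Illustrative re-statement of the subject naming rules; not Confluent's implementation.

    /** TopicNameStrategy (default): "<topic>-key" or "<topic>-value". */
    public static String topicName(String topic, boolean isKey) {
        return topic + (isKey ? "-key" : "-value");
    }

    /** RecordNameStrategy: the fully qualified record name, independent of the topic. */
    public static String recordName(String fullyQualifiedRecordName) {
        return fullyQualifiedRecordName;
    }

    /** TopicRecordNameStrategy: "<topic>-<fully qualified record name>". */
    public static String topicRecordName(String topic, String fullyQualifiedRecordName) {
        return topic + "-" + fullyQualifiedRecordName;
    }

    public static void main(String[] args) {
        System.out.println(topicName("t", true));                        // t-key
        System.out.println(topicName("t", false));                       // t-value
        System.out.println(recordName("com.example.Payment"));           // com.example.Payment
        System.out.println(topicRecordName("t", "com.example.Payment")); // t-com.example.Payment
    }
}
```

The default strategy ties one schema lineage to each topic; the record-based strategies are the usual choice when a single topic needs to carry more than one record type.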

It is highly recommended that you enable schema normalization.

The Confluent Schema Registry-based Avro serializer, by design, does not include the message schema; rather, it includes the schema ID in addition to a magic byte, followed by the normal binary encoding of the data itself. You can choose whether or not to embed a schema inline, allowing for cases where you may want to communicate the schema offline, with headers, or some other way. This is in contrast to other systems, such as Hadoop, that always include the schema with the message data. To learn more, see Wire format.
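A minimal sketch of that framing: one magic byte (0), then the schema ID as a 4-byte big-endian integer, then the Avro binary payload. The helper names below are hypothetical, and the example payload {0x02, 0x61} is the Avro binary encoding of the string "a" (length 1 zig-zag encoded as 0x02, followed by the byte for 'a').

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

public class WireFormat {
    // Magic byte that prefixes every message in this framing.
    static final byte MAGIC_BYTE = 0x0;

    /** Prepends the magic byte and the 4-byte schema ID to an Avro-encoded payload. */
    public static byte[] frame(int schemaId, byte[] avroPayload) {
        // ByteBuffer writes ints in big-endian order by default.
        ByteBuffer buf = ByteBuffer.allocate(1 + 4 + avroPayload.length);
        buf.put(MAGIC_BYTE);
        buf.putInt(schemaId);
        buf.put(avroPayload);
        return buf.array();
    }

    /** Reads the schema ID, rejecting messages that do not start with the magic byte. */
    public static int schemaId(byte[] message) {
        ByteBuffer buf = ByteBuffer.wrap(message);
        if (buf.get() != MAGIC_BYTE) {
            throw new IllegalArgumentException("Unknown magic byte");
        }
        return buf.getInt();
    }

    /** Returns the Avro binary payload that follows the 5-byte header. */
    public static byte[] payload(byte[] message) {
        return Arrays.copyOfRange(message, 5, message.length);
    }

    public static void main(String[] args) {
        byte[] msg = frame(42, new byte[] {0x02, 0x61});
        System.out.println(Arrays.toString(msg)); // [0, 0, 0, 0, 42, 2, 97]
        System.out.println(schemaId(msg));        // 42
    }
}
```

Because only the 5-byte header travels with each message, a consumer resolves the full schema by looking up the ID in Schema Registry (and can cache it) instead of reading it from every record.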

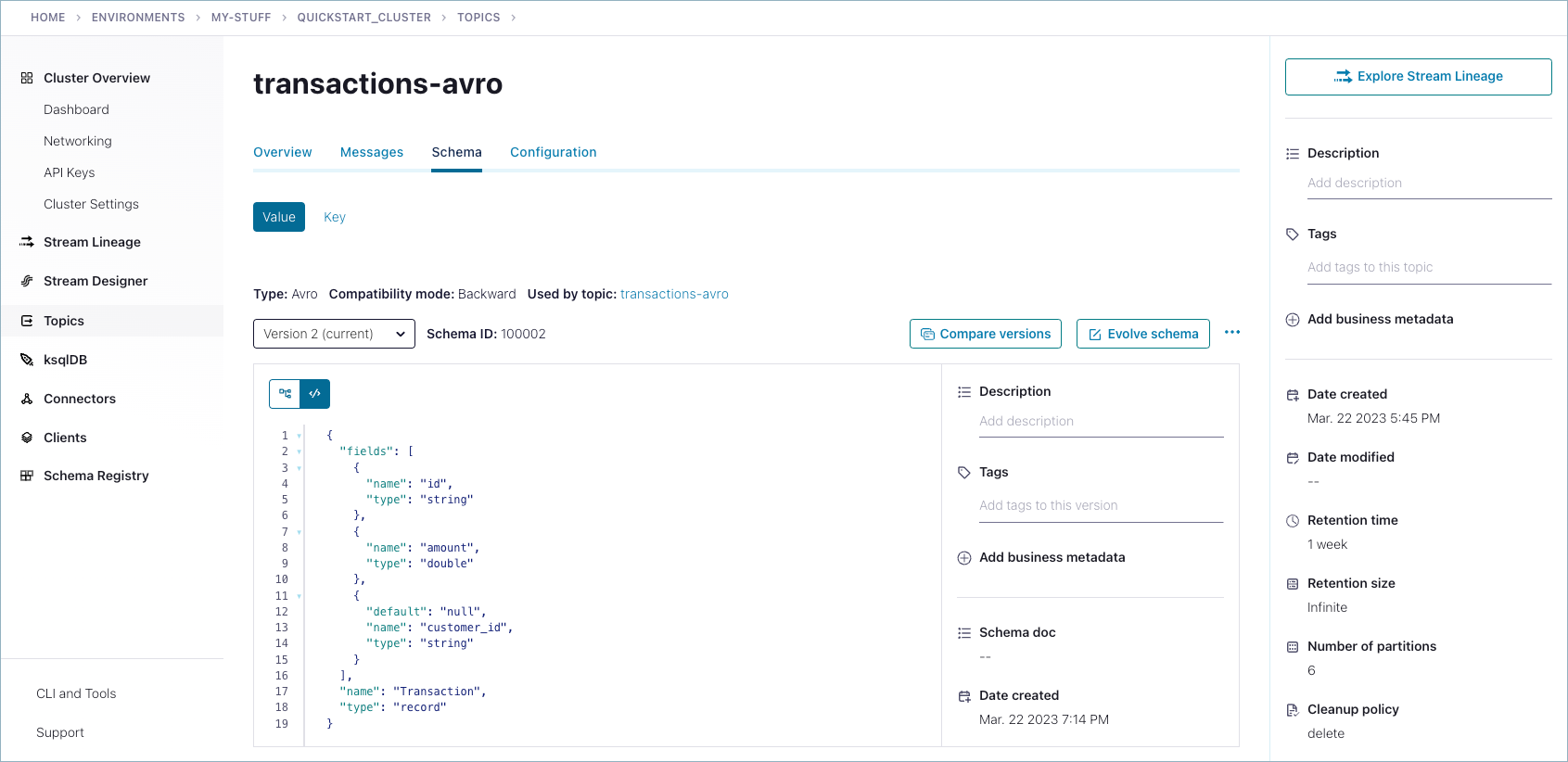

What is the simplest way to write messages to and read messages from Kafka using (de)serializers and Schema Registry? Next, create the following docker-compose. Your first step is to create a topic to produce to and consume from. Use the following command to create the topic. We are going to use Schema Registry to control our record format. The first step is to create a schema definition, which we will use when producing new records. From the same terminal you used to create the topic above, run the following command to open a terminal on the broker container.

In your code, you can create Kafka producers and consumers just as you normally would, with two adjustments. The application code is essentially the same as using Kafka without Confluent Platform.

A single topic may also carry several related event types. For example, a financial service that tracks a customer account might record opening checking and savings accounts, making a deposit, then a withdrawal, applying for a loan, getting approval, and so forth.

Any changes made will be fully backward compatible, with documentation in the release notes, and at least one version of warning will be provided if a change introduces a new serialization feature that requires additional downstream support. Minor formatting of the string representation is performed, but otherwise the schema is left mostly the same. This, along with examples and command line testing utilities, is covered in the deep dive sections. To learn more, see Schema Evolution and Compatibility and Compatibility checks in the overview. Copy the following schema and store it in a file called schema.
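On the consumer side, the matching adjustment is to swap in the Avro deserializer and point it at Schema Registry. The sketch below only builds java.util.Properties, so it runs without Kafka on the classpath; the broker address, group ID, and Schema Registry URL are assumptions. The specific.avro.reader flag is the KafkaAvroDeserializer setting that chooses between generic and generated record classes.

```java
import java.util.Properties;

public class AvroConsumerConfig {
    // Builds consumer settings that use the Schema Registry-aware Avro deserializer.
    // All endpoint and group values passed in are illustrative assumptions.
    public static Properties consumerProps(String bootstrapServers, String groupId, String schemaRegistryUrl) {
        Properties props = new Properties();
        props.put("bootstrap.servers", bootstrapServers);
        props.put("group.id", groupId);
        // Assumption: keys were written as plain strings.
        props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
        props.put("value.deserializer", "io.confluent.kafka.serializers.KafkaAvroDeserializer");
        // Used to fetch the writer schema for the ID embedded in each message.
        props.put("schema.registry.url", schemaRegistryUrl);
        // "false" (the default) yields GenericRecord values; "true" yields generated SpecificRecord classes.
        props.put("specific.avro.reader", "false");
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical local endpoints and group name.
        Properties props = consumerProps("localhost:9092", "example-group", "http://localhost:8081");
        props.forEach((k, v) -> System.out.println(k + "=" + v));
    }
}
```

Reading values as GenericRecord avoids a code-generation step, which is convenient for exploratory consumers; generated classes give compile-time field access at the cost of building them from the schema.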

A typical example sends strings and Avro records in JSON as the key and the value of the message, respectively. The only exception is raw bytes, which are written directly without any special encoding. Log in to Confluent Cloud with: confluent login. Protobuf is the only format that auto-registers schema references; therefore, an additional configuration is provided specifically for Protobuf to supply a naming strategy for auto-registered schema references. Schema Registry supports multiple formats at the same time; for example, you can have Avro schemas in one subject and Protobuf schemas in another. Additionally, Schema Registry is extensible to support adding custom schema formats as schema plugins. Avro in Confluent Platform is also updated to support schema references. A schema reference consists of the following: a name for the reference.
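As an illustration of what a reference carries, the sketch below models one as a name plus the subject and version under which the referenced schema is registered, and renders a list of them as the kind of JSON references array that accompanies a schema registration request to the Schema Registry REST API. The SchemaRef class, its JSON rendering, and the com.example.Customer / customer / 1 values are hypothetical stand-ins, not the Confluent client's own types; for Avro, the reference name is assumed to be the fully qualified name of the referenced type.

```java
import java.util.ArrayList;
import java.util.List;

public class SchemaReferences {
    /** Hypothetical model of one schema reference. */
    public static final class SchemaRef {
        final String name;    // for Avro: fully qualified name of the referenced type (assumption)
        final String subject; // subject under which the referenced schema is registered
        final int version;    // exact version of the referenced schema in that subject

        public SchemaRef(String name, String subject, int version) {
            this.name = name;
            this.subject = subject;
            this.version = version;
        }

        String toJson() {
            return "{\"name\":\"" + escape(name) + "\",\"subject\":\"" + escape(subject)
                    + "\",\"version\":" + version + "}";
        }
    }

    /** Minimal JSON string escaping: backslashes first, then double quotes. */
    public static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    /** Renders the references as a JSON array of {name, subject, version} objects. */
    public static String referencesJson(List<SchemaRef> refs) {
        List<String> parts = new ArrayList<>();
        for (SchemaRef r : refs) {
            parts.add(r.toJson());
        }
        return "[" + String.join(",", parts) + "]";
    }

    public static void main(String[] args) {
        List<SchemaRef> refs = List.of(new SchemaRef("com.example.Customer", "customer", 1));
        System.out.println(referencesJson(refs));
        // [{"name":"com.example.Customer","subject":"customer","version":1}]
    }
}
```

Pinning an exact version, rather than "latest", keeps the referencing schema stable even when the referenced subject evolves.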