PySpark absolute value


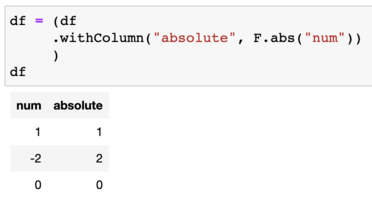

The abs function in PySpark computes the absolute value of a numeric column or expression: it returns the non-negative magnitude of the input, regardless of its original sign. It is commonly used in data analysis and manipulation tasks to normalize data, to calculate the size of differences between values, or to filter on magnitude regardless of sign.

The abs function can be applied to various numeric data types, including integers, floating-point numbers, and decimal numbers. It also accepts null values: a null input yields a null result rather than an error. Its syntax is abs(col), where col represents the column or expression for which you want to compute the absolute value.


Handle null values appropriately using the coalesce function.

Common errors and troubleshooting tips: if you encounter a TypeError stating "unsupported operand type(s) for abs()", make sure you are applying abs to a compatible data type.


The pyspark.sql.functions module is a collection of built-in functions available for DataFrame operations. Among them are functions that return a Column based on a given column name, create a Column from a literal value, and generate a random column of independent and identically distributed (i.i.d.) samples.


Aggregate functions operate on a group of rows and calculate a single return value for every group. They accept either a Column or a column name given as a string, plus other arguments depending on the function, and they return a Column. If performance is critical for your application, avoid custom UDFs wherever possible, because their performance is not guaranteed.


Use column expressions instead of UDFs for better performance.


The abs function takes only one parameter, which is the column or expression to be evaluated. The data type of the return value is the same as the input expression.
