PySpark where

In this PySpark article, you will learn how to filter DataFrame rows on string, array, and struct columns using single and multiple conditions, and how to filter with isin(). Note: the PySpark Column class provides several functions that can be used inside filter(). The filter function takes a single condition, which can be either a Column expression or a SQL expression string: DataFrame.filter(condition).

This tutorial shows you how to load and transform U. By the end of this tutorial, you will understand what a DataFrame is and be familiar with the following tasks: creating a DataFrame with Python, and viewing and interacting with a DataFrame.


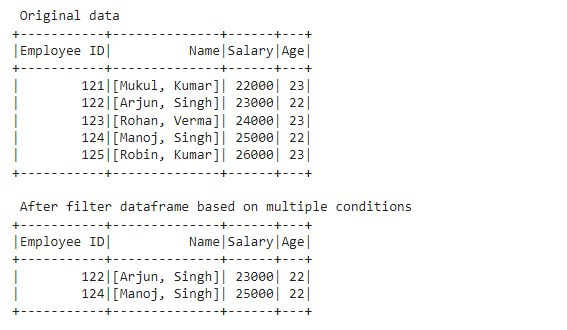

In this article, we look at filtering a PySpark DataFrame with where(). The where() method filters the rows of a DataFrame based on a given condition. It is an alias for filter(), and the two methods behave exactly the same. With where() you can apply a single condition, multiple conditions, or a Column condition to DataFrame columns.

Filter rows in a DataFrame: discover the five most populous cities in your data set by filtering rows, using.

A DataFrame in PySpark is a two-dimensional data structure: one dimension refers to rows and the other to columns, so it stores data in rows and columns. Install the pyspark module before going further; Python modules are installed with pip, for example with the command "pip install pyspark". To create a DataFrame in PySpark, you create a SparkSession and pass your data, plus optional column names or a schema, to createDataFrame().

Spark's where() function filters the rows of a DataFrame or Dataset based on a given condition or SQL expression. In this tutorial, you will learn how to apply single and multiple conditions on DataFrame columns using where(). The function accepts either a Column condition or a SQL expression string; the second form lets you filter rows with SQL syntax. To filter rows on multiple conditions, you can use either a Column with combined conditions or a SQL expression. To filter rows based on a value present in an array collection column, use the Column form, for example with array_contains(). If your DataFrame consists of nested struct columns, you can use either form to filter the rows based on the nested column.


You can also filter on ranges, dates, custom functions, JSON fields, and aggregated values. For example, to keep individuals aged between 25 and 30, use the between() function. Filtering based on date and timestamp columns is a common scenario in data processing, such as keeping only events that occurred after a specific date. You can filter with a UDF, for example one that checks whether a name contains a vowel, or filter JSON data based on a specific field. You can also filter data based on aggregated values, for example keeping employees whose average salary exceeds a threshold.


If you do not have cluster control privileges, you can still complete most of the following steps as long as you have access to a cluster. You can use spark. Last Updated: 28 Mar, Column.contains() checks whether the value contains the given character(s). You can either save your DataFrame to a table or write the DataFrame to a file or multiple files. Create a subset DataFrame with the ten cities with the highest population and display the resulting data.


Column.endswith() checks whether the value ends with the given character(s). Examples explained here are also available in the PySpark examples GitHub project for reference.
