SageMaker PyTorch

With multi-model endpoints (MMEs), you can host multiple models on a single serving container and serve all of them behind a single endpoint. The SageMaker platform automatically manages the loading and unloading of models and scales resources based on traffic patterns, reducing the operational burden of managing a large number of models. This feature is particularly beneficial for deep learning and generative AI models that require accelerated compute.
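As a rough illustration of how one endpoint can front several models, the sketch below builds a request for the SageMaker Runtime `invoke_endpoint` call, using its `TargetModel` parameter to pick the model artifact. The endpoint name, artifact name, and payload shape are hypothetical placeholders, and the JSON content type is an assumption about what the serving container accepts.

```python
import json


def build_invoke_kwargs(endpoint_name, target_model, payload):
    """Build keyword arguments for the SageMaker Runtime invoke_endpoint call."""
    return {
        "EndpointName": endpoint_name,
        # Artifact path relative to the S3 prefix configured for the endpoint.
        "TargetModel": target_model,
        # Assumption: the serving container accepts JSON requests.
        "ContentType": "application/json",
        "Body": json.dumps(payload),
    }


def invoke(endpoint_name, target_model, payload):
    """Send one request to a multi-model endpoint (requires AWS credentials)."""
    # Imported lazily so build_invoke_kwargs can be used without boto3 installed.
    import boto3

    runtime = boto3.client("sagemaker-runtime")
    response = runtime.invoke_endpoint(
        **build_invoke_kwargs(endpoint_name, target_model, payload)
    )
    # Assumption: the container also responds with JSON.
    return json.loads(response["Body"].read())


# Hypothetical names, for illustration only.
kwargs = build_invoke_kwargs("demo-endpoint", "model-a.tar.gz", {"inputs": [1, 2]})
```

Switching models is then just a matter of changing `target_model` per request; the endpoint name stays the same.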

Hugging Face Transformers also provides Trainer and pretrained model classes for PyTorch to help reduce the effort of configuring natural language processing (NLP) models. A dynamic input shape can trigger recompilation of the model and might increase total training time. For more information about the padding options of the Transformers tokenizers, see Padding and truncation in the Hugging Face Transformers documentation. SageMaker Training Compiler automatically compiles your Trainer model if you enable it through the estimator class. You don't need to change your code when you use the Transformers Trainer class.
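To make the input-shape point concrete, here is a minimal plain-Python sketch of padding or truncating every sequence to one fixed length, so that each batch has the same shape. The helper name, token IDs, and `pad_id` are illustrative assumptions. With a Transformers tokenizer, the comparable settings would be `padding="max_length"`, `truncation=True`, and a fixed `max_length`.

```python
def pad_or_truncate(token_ids, max_length, pad_id=0):
    """Return token_ids clipped or right-padded to exactly max_length items."""
    clipped = token_ids[:max_length]
    return clipped + [pad_id] * (max_length - len(clipped))


# Two sequences of different lengths (token IDs are made up for illustration).
batch = [
    [101, 7592, 102],
    [101, 2023, 2003, 1037, 2936, 6251, 102],
]

# Every row now has length 5, so the batch shape is the same on every step.
fixed = [pad_or_truncate(ids, max_length=5) for ids in batch]
```

The trade-off is extra compute spent on padding tokens, in exchange for a static shape that should not trigger further recompilation.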


A generative adversarial network (GAN) is a generative ML model that is widely used in advertising, games, entertainment, media, pharmaceuticals, and other industries. You can use it to create fictional characters and scenes, simulate facial aging, change image styles, produce chemical formulas and synthetic data, and more. For example, the following images show the effect of picture-to-picture conversion. The following images show the effect of synthesizing scenery based on semantic layout. We also introduce a use case for one of the hottest GAN applications, in the area of synthetic data generation. We hope this gives you a tangible sense of how GANs are used in real-life scenarios.


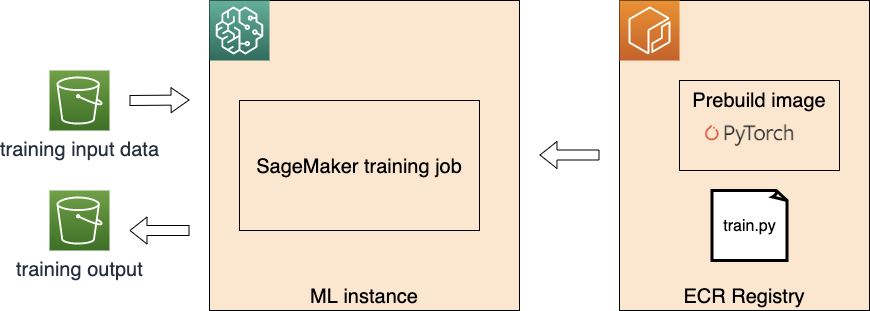

Starting today, you can easily train and deploy your PyTorch deep learning models in Amazon SageMaker. As with the other supported frameworks, you can now write your PyTorch script as you normally would and rely on Amazon SageMaker training to handle setting up the distributed training cluster, transferring data, and even hyperparameter tuning. On the inference side, Amazon SageMaker provides a managed, highly available online endpoint that can be automatically scaled up as needed. Supporting many deep learning frameworks is important to developers, because each framework has unique areas of strength. PyTorch is used heavily by deep learning researchers, but it is also rapidly gaining popularity among developers due to its flexibility and ease of use. TensorFlow is well established and continues to add great features with each release.
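As a sketch of what writing the script "as you normally would" can look like, the snippet below shows only the argument-parsing part of a training script. It assumes hyperparameters arrive as command-line arguments and that the `SM_MODEL_DIR` and `SM_CHANNEL_TRAINING` environment variables are set inside the training container; the latter assumes an input channel named `training`. The hyperparameter names and local fallback paths are hypothetical.

```python
import argparse
import os


def parse_args(argv=None):
    """Parse hyperparameters and data locations for a training script."""
    parser = argparse.ArgumentParser()
    # Hypothetical hyperparameters, passed on the command line.
    parser.add_argument("--epochs", type=int, default=1)
    parser.add_argument("--lr", type=float, default=0.001)
    # Fall back to local folders so the same script also runs on a laptop.
    parser.add_argument(
        "--model-dir", default=os.environ.get("SM_MODEL_DIR", "./model")
    )
    parser.add_argument(
        "--train-dir", default=os.environ.get("SM_CHANNEL_TRAINING", "./data")
    )
    return parser.parse_args(argv)


# A real script would call parse_args() with no arguments to read sys.argv.
args = parse_args(["--epochs", "3"])
```

The rest of the script (model definition, training loop, saving the model to `args.model_dir`) is ordinary PyTorch code and is omitted here.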


Language-guided editing is a common cross-industry generative AI use case. The example we shared illustrates how we can use resource sharing and simplified model management with SageMaker MMEs while still using TorchServe as our model serving stack. Here is how these models are used in the user experience workflow. This action sends the pixel coordinates and the original image to a generative AI model, which generates a segmentation mask for the object. The model handler loads the pre-trained model checkpoints and applies the preprocess and postprocess methods to the input and output data. The models are deployed on one g5 instance. This S3 location is where SageMaker dynamically loads the models from, based on invocation patterns. The following table summarizes the differences between single-model and multi-model endpoints for this example. The following table shows the GPU memory usage of the three models in this post.

In such a case, the printing of lazy tensors should be wrapped using xm.

He focuses on core challenges related to deploying complex ML applications, multi-tenant ML models, cost optimizations, and making deployment of deep learning models more accessible.
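The preprocess, inference, and postprocess flow mentioned above can be pictured with a small plain-Python stand-in. This is not the handler from the example. A real TorchServe handler would typically subclass TorchServe's `BaseHandler` and load an actual checkpoint. The class name, request format, and toy "model" below are all assumptions for illustration.

```python
class SketchHandler:
    """Plain-Python stand-in for a model server handler (illustrative only)."""

    def __init__(self):
        self.model = None

    def initialize(self, load_fn):
        # A real handler would load pre-trained checkpoints from disk here.
        self.model = load_fn()

    def preprocess(self, requests):
        # Convert raw request payloads into model inputs.
        return [float(request["value"]) for request in requests]

    def inference(self, inputs):
        # Run the loaded model on each input.
        return [self.model(x) for x in inputs]

    def postprocess(self, outputs):
        # Convert model outputs into response payloads.
        return [{"prediction": round(y, 3)} for y in outputs]

    def handle(self, requests):
        # Chain the three stages in order.
        return self.postprocess(self.inference(self.preprocess(requests)))


handler = SketchHandler()
# Toy "model" that doubles its input; stands in for a loaded checkpoint.
handler.initialize(lambda: (lambda x: 2.0 * x))
result = handler.handle([{"value": "1.5"}, {"value": 4}])
```

Keeping the three stages as separate methods means each model can override only the stage that differs, such as image decoding in preprocessing.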


ParallelLoader, or when you explicitly request the value of a tensor, such as by calling loss. Ideally, only one compilation-and-execution is necessary per training iteration and is initiated automatically by pl. If you're not using AMP, replace optimizer. SageMaker Training Compiler uses an alternate mechanism for launching a distributed training job, and you don't need to make any modifications to your training script.

We follow the same four-step process to prepare each model. The file defines the configuration of the model server, such as the number of workers and the batch size. For a complete list of parameters, refer to the GitHub repo.

Outside of work, Ankith is also an electronic dance music producer.
