Torch cuda

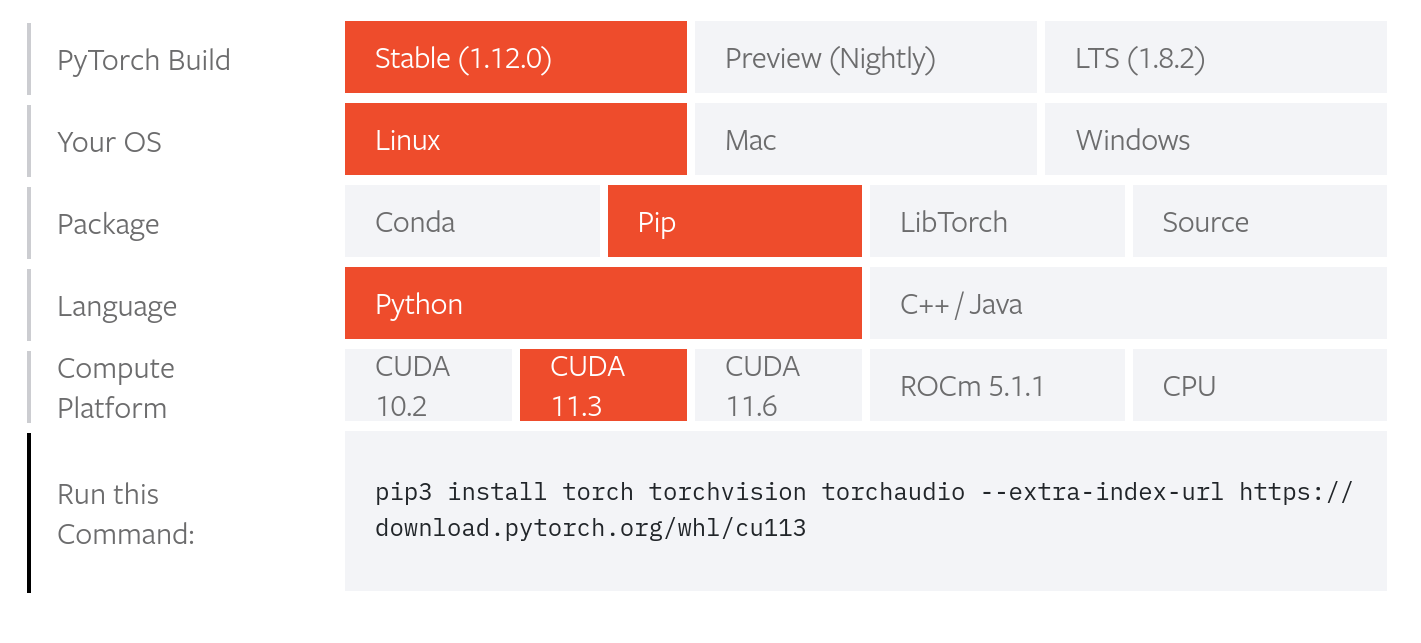

PyTorch is a general-purpose software package for use in scripts written in Python. Its main application is building machine learning models and running them on hardware resources. To run the torch cuda examples, submit the job to the SLURM queue with the sbatch job command.

Released: May 23. Maintainer: Adam Jan Kaczmarek. FoXAI simplifies the application of eXplainable AI (XAI) algorithms to explain the performance of neural network models during training. The library acts as an aggregator of existing libraries with implementations of various XAI algorithms and seeks to facilitate and popularize their use in machine learning projects.


At the end of the model training, the model is saved in PyTorch format. To be able to retrieve and use the ONNX model at the end of training, you need to create an empty bucket to store it. In the Control Panel, select the container type and the region that match your needs. To create the bucket from the command line instead, make sure you have installed the ovhai CLI on your computer or on an instance. As in the Control Panel, you will have to specify the region and the name (cnn-model-onnx) of your bucket.

To train the model, we will use AI Training. This tool allows you to train your AI models from your own Docker images. Find the full Python code on our GitHub repository. Then, create a requirements. In our case we choose to start from a python


I carried out this project during my studies as part of my engineering thesis. The goal of the project was to write a module that detects the location of obstacles and their dimensions from a 3D Lidar scan.

PyTorch is an open source machine learning framework based on Python. It enables you to perform scientific and tensor computations with the aid of graphics processing units (GPUs), and it also supports asynchronous execution. You can use it to develop and train deep learning neural networks using automatic differentiation, a technique that computes derivatives exactly (up to floating-point precision) at a small constant multiple of the cost of evaluating the function itself.

CUDA support in PyTorch enables you to perform compute-intensive operations faster by parallelizing tasks across GPUs. After a tensor is allocated on a device, you can perform operations with it, and the results are assigned to the same device. To launch operations across distributed tensors, you must first enable peer-to-peer memory access.
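The device-placement behaviour described above can be sketched as follows. This is a minimal illustration, not code from the original article: the tensor names are made up, and the fallback to the CPU is an assumption added so the snippet also runs on a machine without a GPU.

```python
import torch

# Assumption: fall back to the CPU when no CUDA device is visible.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Allocate two tensors directly on the chosen device.
a = torch.ones(2, 3, device=device)
b = torch.full((2, 3), 2.0, device=device)

# The result of an operation lives on the same device as its operands.
c = a + b
print(c.device)
```

Mixing tensors that live on different devices in one operation raises an error, so moving data explicitly with `.to(device)` is the usual pattern.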


The container also includes TensorRT 8. The CUDA driver's compatibility package only supports particular drivers. AMP (automatic mixed precision) enables users to try mixed precision training by adding only three lines of Python to an existing FP32 (default) script.


To separate runtime environments for different services and repositories, it is recommended to use a virtual Python environment. The assumption is that the poetry package is installed. If you would like to install from source, you can build a wheel package using poetry.

torch.cuda.current_stream() returns the currently selected Stream for a given device, and torch.cuda.default_stream() returns the default Stream for a given device. torch.cuda.memory_usage() returns the percentage of time over the past sample period during which global device memory was being read or written, as reported by nvidia-smi.
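The stream queries above can be tried with a short sketch like the one below. The helper name `describe_streams` is hypothetical, and returning `None` on a machine without CUDA is an assumption made so the snippet does not fail there.

```python
import torch


def describe_streams():
    """Compare the current and default CUDA streams (hypothetical helper)."""
    if not torch.cuda.is_available():
        # Assumption: nothing to report without a CUDA device.
        return None

    current = torch.cuda.current_stream()
    default = torch.cuda.default_stream()

    # Temporarily select a new side stream and query the current one again.
    side = torch.cuda.Stream()
    with torch.cuda.stream(side):
        inside = torch.cuda.current_stream()

    return {
        "current_is_default": current == default,
        "side_selected": inside == side,
    }


result = describe_streams()
print(result)
```

Until another stream is selected, the current stream is the default one, which is why the first comparison is expected to hold.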

You can launch the training with more or fewer GPUs depending on how fast you want it to run. Computation can be distributed across several GPUs with the DataParallel class (contents of the file job-multigpu-dp). The results are then gathered on the designated default device.
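As a rough illustration of distributing computation across several GPUs with DataParallel, consider the sketch below. It is not the content of the job file mentioned in the source: the `TinyNet` model, the layer sizes, and the batch shape are all hypothetical, and the model is wrapped only when more than one GPU is visible so the script also runs on a single GPU or a CPU.

```python
import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical one-layer model used only for illustration."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(5, 2)

    def forward(self, x):
        return self.fc(x)


device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = TinyNet()

# Wrap the model only when several GPUs are visible; DataParallel then
# splits each batch across them and gathers the outputs on one device.
if torch.cuda.device_count() > 1:
    model = nn.DataParallel(model)
model = model.to(device)

batch = torch.randn(30, 5, device=device)
output = model(batch)
print("Model output size", output.size())
```

For multi-node or performance-critical training, the PyTorch documentation recommends DistributedDataParallel over DataParallel; the latter is shown here only because it is the class the text names.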
