Matlab pca

Principal Component Analysis (PCA) is often used as a data mining technique to reduce the dimensionality of the data.

From the File Exchange (Siamak Faridani, retrieved March 13): This is a demonstration of how one can use PCA to classify a 2D data set. This is the simplest form of PCA, but you can easily extend it to higher dimensions, and you can do image classification with PCA. PCA consists of a number of steps:

- Loading the data
- Subtracting the mean of the data from the original dataset
- Finding the covariance matrix of the dataset
- Finding the eigenvector(s) associated with the greatest eigenvalue(s)
- Projecting the original dataset on the eigenvector(s)
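The five steps listed above can be sketched in a few lines of base MATLAB. This is a minimal illustration, not the File Exchange author's code: the data are synthetic (an assumption standing in for a real file), the variable names are made up for the example, and it stops at the projection step rather than doing any classification.

```matlab
% Step 1: load the data. Synthetic correlated 2-D points stand in for a real file.
rng(0);                                % fixed seed so the run is repeatable
n = 200;
t = randn(n, 1);
X = [t, 0.5*t + 0.2*randn(n, 1)];      % n-by-2, rows are observations

% Step 2: subtract the mean of each column.
mu = mean(X, 1);
Xc = X - mu;                           % implicit expansion (R2016b or later)

% Step 3: covariance matrix of the centered data (2-by-2).
C = cov(Xc);

% Step 4: eigenvectors sorted by decreasing eigenvalue.
[V, D] = eig(C);
[lambda, order] = sort(diag(D), 'descend');
V = V(:, order);
w = V(:, 1);                           % direction of greatest variance

% Step 5: project the centered data onto that eigenvector.
z = Xc * w;                            % n-by-1 scores on the first component
```

The variance of the projected data `z` equals the largest eigenvalue `lambda(1)`, which is a quick sanity check that the right eigenvector was picked.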

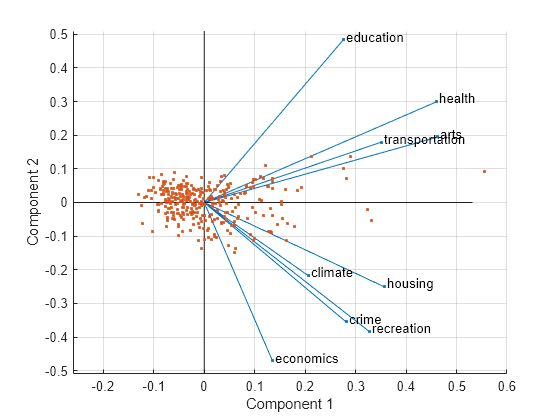

One of the difficulties inherent in multivariate statistics is the problem of visualizing data that has many variables. The function plot displays a graph of the relationship between two variables. The plot3 and surf commands display different three-dimensional views. But when there are more than three variables, it is more difficult to visualize their relationships. Fortunately, in data sets with many variables, groups of variables often move together. One reason for this is that more than one variable might be measuring the same driving principle governing the behavior of the system. In many systems there are only a few such driving forces. But an abundance of instrumentation enables you to measure dozens of system variables. When this happens, you can take advantage of this redundancy of information.

You can simplify the problem by replacing a group of variables with a single new variable. Principal component analysis is a quantitatively rigorous method for achieving this simplification. The method generates a new set of variables, called principal components. Each principal component is a linear combination of the original variables. All the principal components are orthogonal to each other, so there is no redundant information.
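A small check of the two properties just described (linear combinations, mutual orthogonality) might look like the following. It is a sketch on synthetic data, which is an assumption made for illustration; it uses the pca function from Statistics and Machine Learning Toolbox, and three of the four made-up variables share one hidden driving force.

```matlab
rng(1);                                     % repeatable synthetic data
n = 100;
driver = randn(n, 1);                       % one hidden "driving force"
X = [driver + 0.1*randn(n, 1), ...
     2*driver + 0.1*randn(n, 1), ...
     -driver + 0.1*randn(n, 1), ...
     randn(n, 1)];                          % an unrelated fourth variable

[coeff, score] = pca(X);

% Each component is a linear combination of the centered original variables.
comboErr = norm(score - (X - mean(X, 1)) * coeff);

% The coefficient vectors are orthonormal ...
orthoErr = norm(coeff' * coeff - eye(4));

% ... and the resulting scores are uncorrelated with each other.
S = cov(score);
offDiag = max(max(abs(S - diag(diag(S)))));
```

All three error measures should be at the level of floating-point round-off.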

When the variables are in different units or the difference in the variance of different columns is substantial, as in this case, scaling of the data or use of weights is often preferable.
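To see why scaling matters, the sketch below compares an unweighted PCA with one that uses inverse-variance variable weights. The data are synthetic with deliberately mismatched scales (an assumption for illustration). The 'VariableWeights' option is the one named in this document; passing `1./var(X)` is one way to weight by inverse variance, and running pca on `zscore(X)` is shown as an equivalent route for the component variances.

```matlab
rng(2);
n = 150;
% Three unrelated variables on wildly different scales (hypothetical units).
X = [randn(n, 1), 1000*randn(n, 1), 0.01*randn(n, 1)];

% Unweighted: the large-scale column dominates the first component.
[~, ~, latentRaw] = pca(X);

% Inverse-variance weights put the columns on an equal footing.
[~, ~, latentW] = pca(X, 'VariableWeights', 1./var(X));

% Standardizing first gives the same component variances.
[~, ~, latentZ] = pca(zscore(X));

shareRaw = latentRaw(1) / sum(latentRaw);   % close to 1
shareW   = latentW(1) / sum(latentW);       % roughly 1/3 for unrelated columns
```

Without weights, nearly all the variance is attributed to the second column simply because of its units, which says nothing about structure in the data.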

The rows of coeff contain the coefficients for the four ingredient variables, and its columns correspond to four principal components.

Data matrix X has 13 continuous variables, starting at column 3: wheel-base, length, width, height, curb-weight, engine-size, bore, stroke, compression-ratio, horsepower, peak-rpm, city-mpg, and highway-mpg. The variables bore and stroke are missing four values in rows 56 to 59, and the variables horsepower and peak-rpm are missing two values. By default, pca performs the action specified by the 'Rows','complete' name-value pair argument. This option removes the observations with NaN values before calculation. Rows of NaN values are reinserted into score and tsquared at the corresponding locations, including rows 56 to 59.
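The default 'Rows','complete' behavior can be observed on a small matrix. This is a sketch on synthetic data with a few NaN values planted by hand (the matrix and the positions of the missing values are assumptions, not the data set described above).

```matlab
rng(3);
X = randn(20, 4);
X(3, 2) = NaN;                          % plant some missing values
X([7 8], 4) = NaN;
missing = any(isnan(X), 2);             % rows with at least one NaN

% Default behavior: rows with NaN are dropped before the fit.
[coeff, score, ~, tsquared] = pca(X);

% Fitting only the complete rows by hand gives the same coefficients.
coeffClean = pca(X(~missing, :));

% score and tsquared keep one row per original observation,
% with NaN in the rows that were excluded from the fit.
nanRowsInScore = all(isnan(score(missing, :)), 2);
```

Because the NaN rows are reinserted, `score` can be lined up with the original observations without any index bookkeeping.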

Rows of X correspond to observations and columns correspond to variables. The coefficient matrix is p-by-p. Each column of coeff contains coefficients for one principal component, and the columns are in descending order of component variance. By default, pca centers the data and uses the singular value decomposition (SVD) algorithm. With name-value pair arguments you can, for example, specify the number of principal components pca returns or an algorithm other than SVD to use. You can use any of the input arguments in the previous syntaxes.
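The defaults and two of the options mentioned above can be tried as follows. The data are synthetic (an assumption for illustration); 'NumComponents' and 'Algorithm' are name-value arguments of pca, and the sixth output `mu` is the estimated mean that pca subtracts when centering.

```matlab
rng(4);
X = randn(50, 5) * randn(5, 5);         % 50 observations, p = 5 variables

% Defaults: centered data, SVD algorithm, all p components.
[coeff, score, latent, ~, ~, mu] = pca(X);

% Ask for the first two components only.
coeff2 = pca(X, 'NumComponents', 2);

% Use eigenvalue decomposition of the covariance matrix instead of SVD.
coeffEig = pca(X, 'Algorithm', 'eig');

% Centering can be undone with mu: scores times coefficients plus the mean
% reproduce the original data when all components are kept.
reconErr = norm(score * coeff' + mu - X);
```

Here `coeff` is 5-by-5 and `coeff2` is 5-by-2, with `coeff2` matching the first two columns of `coeff`.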

It assumes that data with large variation is important. PCA tries to find a unit vector (the first principal component) such that the line along it, through the mean of the data, minimizes the average squared distance from the points to that line. The other components are directions perpendicular to this line.

The third output, latent, is a vector containing the variance explained by the corresponding principal component. After performing PCA, you can plot the top 5 principal components or the eigenvectors. Principal components analysis constructs uncorrelated new variables which are linear combinations of the original variables. The T-squared statistic can also be computed in the reduced or the discarded space.
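As a rough illustration of what latent holds, the sketch below relates it to the variance of the scores and to the percent-explained output. The data are synthetic, and the 95% threshold is an arbitrary choice made for the example. Plotting is left as a commented suggestion so the snippet runs without a display.

```matlab
rng(5);
% Six variables with decreasing spread (hypothetical data).
X = randn(80, 6) * diag([5 3 2 1 0.5 0.1]);

[coeff, score, latent, ~, explained] = pca(X);

% latent holds the variance of each column of score.
scoreVar = var(score)';

% explained (fifth output) is latent expressed as a percentage of the total.
pct = 100 * latent / sum(latent);

% Smallest number of components that reaches 95% of the total variance.
cumPct = cumsum(explained);
k = find(cumPct >= 95, 1);

% To look at the results, one option is:
%   plot(coeff(:, 1:5))      % coefficients of the top 5 components
%   bar(explained)           % percent variance per component
```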

The principal components as a whole form an orthogonal basis for the space of the data. The score matrix is the same size as the input data matrix. The pca function imposes a sign convention, forcing the element with the largest magnitude in each column of coeff to be positive.

pca can find the principal component coefficients when there are missing values in a data set. If you require 'svd' as the algorithm, with the 'pairwise' option, then pca returns a warning message, sets the algorithm to 'eig' and continues. The values for the 'Weights' and 'VariableWeights' name-value pair arguments must be real.

pca can also report the percent variability explained by the principal components. The only clear break in the amount of variance accounted for by each component is between the first and second components. To test a trained model using a test data set, you need to apply the PCA transformation obtained from the training data to the test data set.
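Applying a training-set PCA transformation to test data could be sketched like this. The split, the synthetic data, and the 95% variance threshold are assumptions for illustration. The key point is that the test data are centered with the training mean `mu` and projected with the training coefficients, not refitted.

```matlab
rng(6);
X = randn(120, 5) * randn(5, 5);        % hypothetical data, 5 variables
XTrain = X(1:90, :);
XTest  = X(91:end, :);

% Fit PCA on the training data only.
[coeff, scoreTrain, ~, ~, explained, mu] = pca(XTrain);

% Keep enough components to explain at least 95% of the variance.
k = find(cumsum(explained) >= 95, 1);
scoreTrainK = scoreTrain(:, 1:k);

% Reuse the training mean and coefficients on the test data.
scoreTestK = (XTest - mu) * coeff(:, 1:k);

% Sign convention: the largest-magnitude entry of each column is positive.
[~, idx] = max(abs(coeff), [], 1);
lin = sub2ind(size(coeff), idx, 1:size(coeff, 2));
signsOk = all(coeff(lin) > 0);
```

A model trained on `scoreTrainK` can then be evaluated on `scoreTestK`, since both live in the same reduced space.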
