Microsoft TTS

This article covers the text to speech REST API: authorization options, query options, how to structure a request, and how to interpret a response. Use the REST API only in cases where you can't use the Speech SDK. For example, with the Speech SDK you can subscribe to events for more insights about the text to speech processing and results.



SpeechT5 was first released in this repository, with the original weights. The license used is MIT. Disclaimer: the team releasing SpeechT5 did not write a model card for this model, so this model card has been written by the Hugging Face team.

Leveraging large-scale unlabeled speech and text data, SpeechT5 is pre-trained to learn a unified-modal representation, with the aim of improving the modeling capability for both speech and text. Extensive evaluations show the superiority of the proposed SpeechT5 framework on a wide variety of spoken language processing tasks, including automatic speech recognition, speech synthesis, speech translation, voice conversion, speech enhancement, and speaker identification.

You can use this model for speech synthesis. See the model hub to look for fine-tuned versions on a task that interests you. Refer to this Colab notebook for an example of how to fine-tune SpeechT5 for TTS on a different dataset or a new language. The training data is LibriTTS.

Users, both direct and downstream, should be made aware of the risks, biases and limitations of the model. More information is needed for further recommendations. Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al.

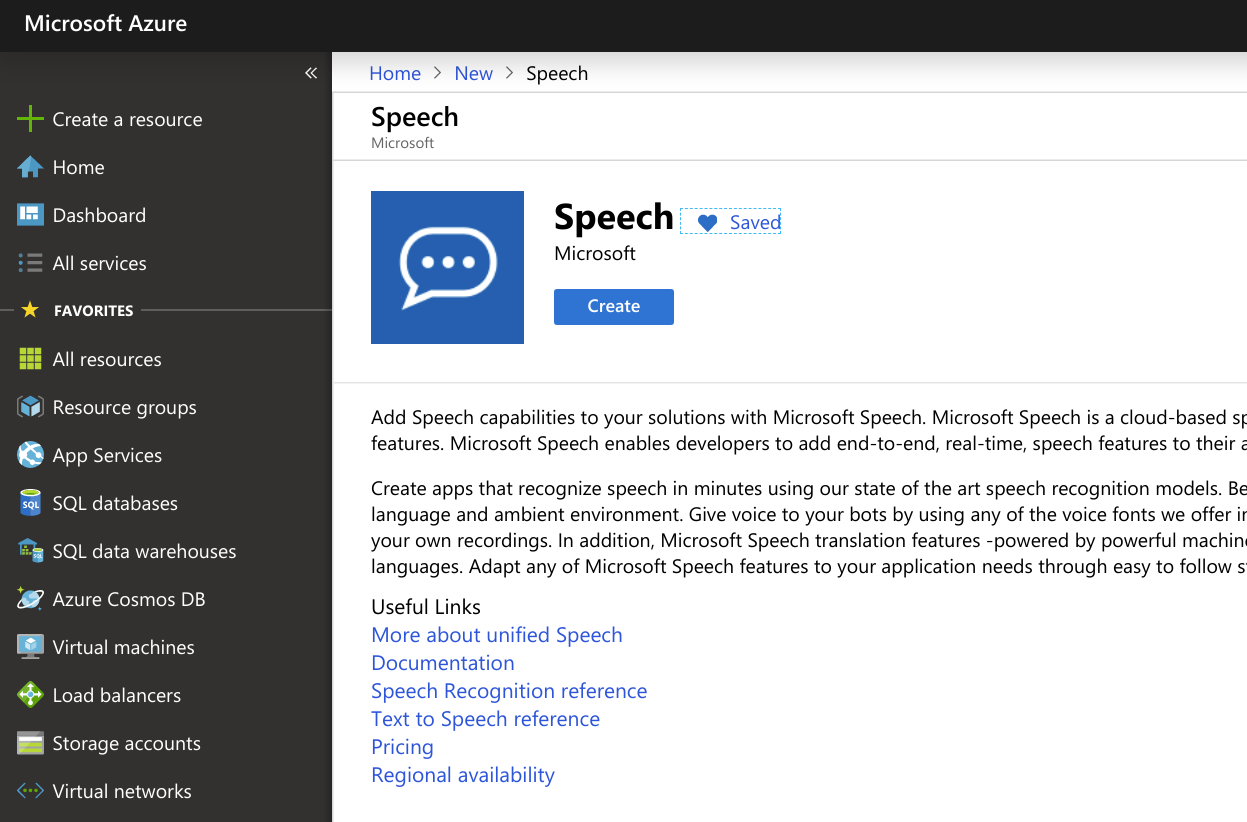

With our Microsoft Azure voice generator, you can type or import text and convert it into speech in a matter of seconds. Preview the audio and adjust voice tones and pronunciations before converting your text to speech.


In this overview, you learn about the benefits and capabilities of the text to speech feature of the Speech service, which is part of Azure AI services. Text to speech enables your applications, tools, or devices to convert text into humanlike synthesized speech. The text to speech capability is also known as speech synthesis. Use humanlike prebuilt neural voices out of the box, or create a custom neural voice that's unique to your product or brand. For a full list of supported voices, languages, and locales, see Language and voice support for the Speech service.


Each available endpoint of the text to speech REST API is associated with a region. A Speech resource key for the endpoint or region that you plan to use is required. Costs vary for prebuilt neural voices (called Neural on the pricing page) and custom neural voices (called Custom Neural on the pricing page). For more information, see Speech service pricing.
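As a rough illustration of how a region, a resource key, and a request body fit together, here is a minimal sketch that builds (but does not send) a text to speech REST request using only the Python standard library. The endpoint pattern, header names, output format string, and the `en-US-JennyNeural` voice come from the public Azure documentation as best recalled, not from this article, so verify them against the current reference. The helper names `build_ssml` and `build_tts_request` are hypothetical.

```python
import urllib.request
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text: str, voice: str = "en-US-JennyNeural", lang: str = "en-US") -> str:
    """Wrap plain text in a minimal SSML document for a single voice."""
    return (
        f'<speak version="1.0" xml:lang={quoteattr(lang)}>'
        f"<voice name={quoteattr(voice)}>{escape(text)}</voice>"
        "</speak>"
    )


def build_tts_request(
    text: str,
    key: str,
    region: str = "westus",  # assumption: any region where your Speech resource lives
    output_format: str = "riff-24khz-16bit-mono-pcm",
) -> urllib.request.Request:
    """Build a POST request for the regional text to speech endpoint."""
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"
    headers = {
        "Ocp-Apim-Subscription-Key": key,         # Speech resource key
        "Content-Type": "application/ssml+xml",   # the body is SSML
        "X-Microsoft-OutputFormat": output_format,
        "User-Agent": "tts-sketch",               # hypothetical application name
    }
    body = build_ssml(text).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


# Sending the request needs a real key and network access:
#   with urllib.request.urlopen(build_tts_request("Hello", key="...")) as resp:
#       audio_bytes = resp.read()
```

Escaping the text before embedding it in SSML keeps characters such as `&` and `<` from breaking the XML body.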


Create an Azure account and Speech service subscription, and then use the Speech SDK or visit the Speech Studio portal and select prebuilt neural voices to get started.

When you use the text to speech feature, you're billed for each character that's converted to speech, including punctuation. If your selected voice and output format have different bit rates, the audio is resampled as necessary. By using viseme events in the Speech SDK, you can generate facial animation data.

For a custom neural voice, all it takes to get started is a handful of audio files and the associated transcriptions. Typically, when training a voice model, two computing tasks are running in parallel.

Before you use the text to speech REST API, understand that you need to complete a token exchange as part of authentication to access the service.
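The token exchange can be sketched as follows: the resource key is posted to a regional token endpoint, and the returned access token is then sent as a bearer token on later requests. The `issueToken` URL pattern and the roughly ten-minute token lifetime are recalled from the public Azure documentation rather than stated in this article, so treat them as assumptions. The `TokenCache` class and the nine-minute refresh window are hypothetical illustration choices.

```python
import time
import urllib.request
from typing import Callable, Dict, Optional

# Assumption: tokens last about 10 minutes, so refresh a little earlier.
TOKEN_TTL_SECONDS = 9 * 60


def build_token_request(key: str, region: str = "westus") -> urllib.request.Request:
    """Build the POST request that exchanges a resource key for an access token."""
    url = f"https://{region}.api.cognitive.microsoft.com/sts/v1.0/issueToken"
    return urllib.request.Request(
        url, data=b"", headers={"Ocp-Apim-Subscription-Key": key}, method="POST"
    )


def fetch_token(key: str, region: str = "westus") -> str:
    """Perform the exchange over the network and return the raw token text."""
    with urllib.request.urlopen(build_token_request(key, region)) as resp:
        return resp.read().decode("utf-8")


class TokenCache:
    """Reuse a token until its time-to-live elapses, then fetch a new one."""

    def __init__(
        self,
        fetch: Callable[[], str],
        ttl: float = TOKEN_TTL_SECONDS,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch
        self._ttl = ttl
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        if self._token is None or now >= self._expires_at:
            self._token = self._fetch()
            self._expires_at = now + self._ttl
        return self._token


def auth_header(token: str) -> Dict[str, str]:
    """Header to send with synthesis requests instead of the raw key."""
    return {"Authorization": f"Bearer {token}"}
```

In real use, the cache would be created as `TokenCache(lambda: fetch_token(key, region))`, so that synthesis calls share one token instead of requesting a new one each time.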


Each prebuilt neural voice model is available at 24 kHz and high-fidelity 48 kHz. If you select a 48 kHz output format, the high-fidelity 48 kHz voice model is invoked accordingly.

For custom neural voice, if the endpoint is newly created or suspended during the day, it's billed for its accumulated running time until the second day (UTC). Because two computing tasks typically run in parallel during voice model training, the calculated compute hours are longer than the actual training time. For more information, see Get started with custom neural voice and Speech service pricing.

With asynchronous (batch) synthesis, the expectation is that requests are sent asynchronously, responses are polled for, and synthesized audio is downloaded when the service makes it available.
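The send, poll, download pattern for asynchronous synthesis can be sketched as a small polling loop. This is a generic illustration, not the service's client: `get_status` stands in for whatever call reports a job's state, and the status strings `"Succeeded"` and `"Failed"` are placeholder assumptions to check against the actual API response.

```python
import time
from typing import Callable

# Assumption: placeholder names for the states in which polling should stop.
TERMINAL_STATUSES = {"Succeeded", "Failed"}


def wait_for_synthesis(
    get_status: Callable[[], str],
    poll_interval: float = 5.0,
    timeout: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll get_status until it reports a terminal state or the timeout passes."""
    deadline = clock() + timeout
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if clock() >= deadline:
            raise TimeoutError(f"synthesis still {status!r} after {timeout} seconds")
        sleep(poll_interval)
```

Once the function returns `"Succeeded"`, the caller would download the audio from wherever the job result points. Passing `sleep` and `clock` as parameters keeps the loop easy to test without real delays.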
